🗓️️ Week 19 TLDR: Making gentest great

I snapped out of my research to do some coding, partly because I missed coding and partly because I need some time to think about the conclusion for the research.

EVM research: Proposing solutions

The analysis on mainnet memory usage has received a largely positive response. Mario Vega (from EEST) notes:

"Very good report, it has some interesting tidbits I didn't expect, like the tx that used CODECOPY to expand memory. I assume it's a contract that uses its own code as storage or a factory contract. If you could add some conclusions to the report, it would be great IMO."

Thomas (from EF Robust Incentive Group) agrees that I need a formal conclusion:

"I think it would be very valuable to write a doc with a summary of the most interesting findings, and then motivate what you think changes in pricing should be (or highlight several options/proposals, with their pros and cons)."

This reassures me that I'm moving in roughly the right direction. I don't yet have a narrative that explains what cheaper memory could unlock. Once that is thought through, I see a few ways to achieve it:

- Reduce the pricing for memory accessing opcodes: Currently around 3 gas.

- Increase the limit of linear memory expansion: Currently at 704 bytes.

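- Tone down the memory expansion penalty: This is defined by the equation:

$$C_{mem}(a) = G_{memory} \cdot a + \left\lfloor \frac{a^2}{512} \right\rfloor$$

Where $a$ is the number of words of memory expanded and $G_{memory} = 3$ gas. Memory is expanded in multiples of the EVM word size (32 bytes). For example, if a word is written to offset 3, the size of expanded memory is $\lceil (3 + 32) / 32 \rceil = 2$ words (rounded up).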
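To make the three knobs above concrete, here is a minimal sketch of the memory cost calculation as I understand it from the Yellow Paper: 3 gas per word plus a quadratic term of words squared divided by 512. The function and constant names are my own for illustration and are not taken from any client.

```python
G_MEMORY = 3          # linear gas cost per 32-byte word
WORD_SIZE = 32        # EVM word size in bytes
QUAD_DIVISOR = 512    # divisor of the quadratic penalty term


def words_needed(offset: int, length: int) -> int:
    """Number of 32-byte words required to cover [offset, offset + length)."""
    if length == 0:
        return 0  # zero-length accesses do not expand memory
    return (offset + length + WORD_SIZE - 1) // WORD_SIZE  # round up


def memory_cost(words: int) -> int:
    """Total gas charged for having `words` words of active memory."""
    return G_MEMORY * words + (words * words) // QUAD_DIVISOR


def expansion_cost(old_words: int, new_words: int) -> int:
    """Gas charged for growing memory from old_words to new_words."""
    if new_words <= old_words:
        return 0  # memory never shrinks, and re-touching it is free
    return memory_cost(new_words) - memory_cost(old_words)
```

With these definitions, `words_needed(3, 32)` is 2, matching the example above. The quadratic term stays at zero up to 22 words (704 bytes), which is where the linear limit comes from: `memory_cost(22)` is 66, while `memory_cost(23)` is 70 rather than 69.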

Each parameter has its own pros and cons, and possibly a composite solution could work better. I will have more to say soon ™️!

Making gentest great

EEST has a component called gentest, a command that lets you create dynamic tests from a mainnet transaction.

In essence, it verifies that a transaction in the wild does indeed follow the specs defined by the Ethereum protocol.

Currently, gentest has some limitations; for example, it only supports type 1 transactions. My goal is to make gentest great.

gentest connects to an RPC node to generate tests. However, my RPC provider requires a custom header to connect, and the config file did not support one.

I initially planned to add support for custom headers.
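To show what the custom header is needed for, here is a minimal sketch (not gentest's actual code) of building a JSON-RPC request with extra headers using only Python's standard library. The function name `build_rpc_request`, the header name `X-Api-Key`, and the URL in the usage note are hypothetical; `eth_getTransactionByHash` is the standard JSON-RPC method for fetching a transaction.

```python
import json
import urllib.request
from typing import Optional


def build_rpc_request(
    url: str,
    tx_hash: str,
    extra_headers: Optional[dict] = None,
) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC request for a transaction."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "eth_getTransactionByHash",
        "params": [tx_hash],
    }
    headers = {"Content-Type": "application/json"}
    # Provider-specific headers (e.g. an API key) are merged in here.
    headers.update(extra_headers or {})
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

A caller would pass the provider's headers from configuration, for example `build_rpc_request("https://rpc.example.invalid", tx_hash, {"X-Api-Key": "..."})`, and send the result with `urllib.request.urlopen`.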

Throughout this process, Dan and I debated migrating from JSON to YAML. Our discussions revealed that managing various configurations is a fundamental requirement of the project.

Long story short, I ended up creating a full-fledged configuration manager for EEST, which lets you add any configuration across the project easily.

Next, I migrated the templating logic from Python strings to Jinja2, a popular templating engine.

I have been thinking about how gentest can be made fundamentally better and have proposed a revised architecture for gentest.

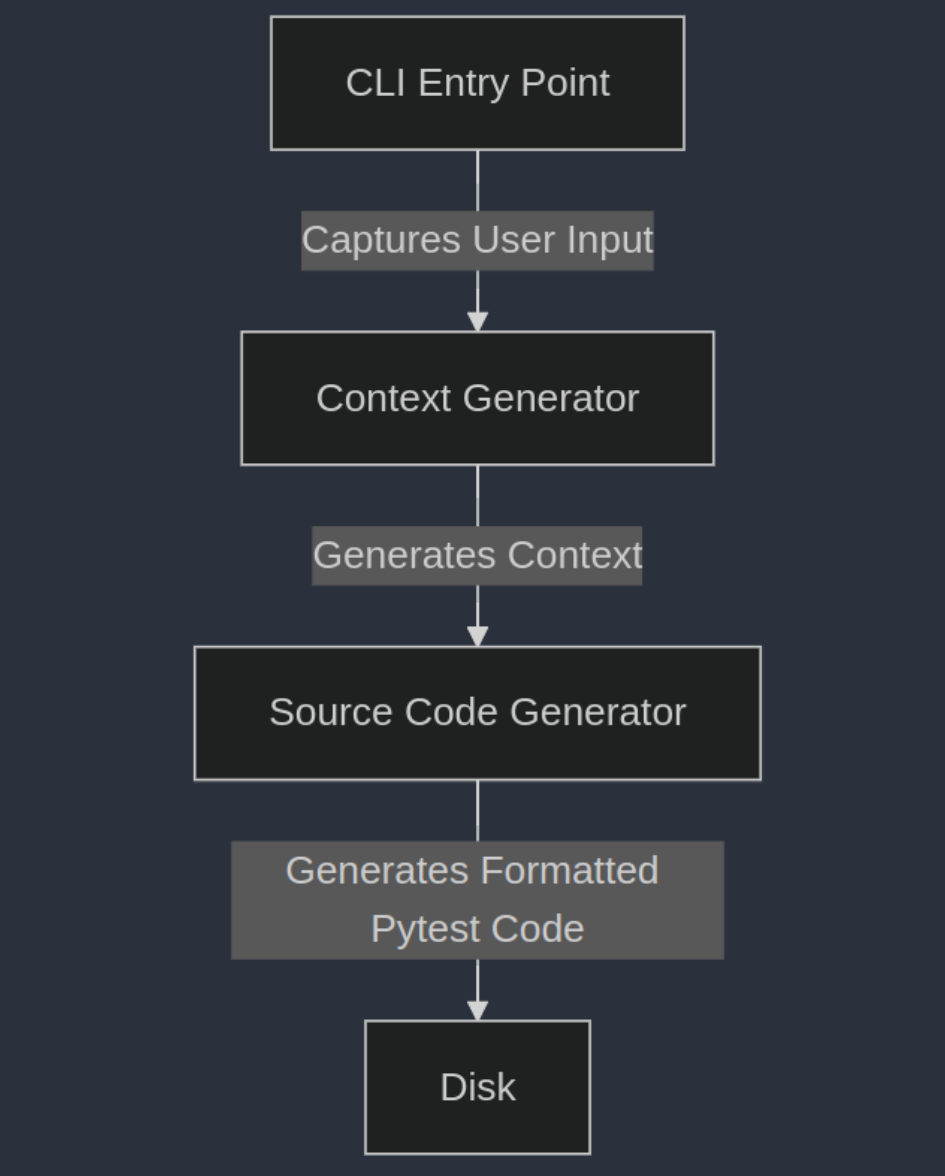

The gentest module generates a test script in three steps:

- The CLI entry point captures the user input required for generating the test.

- This input is used to generate additional context required for creating tests.

- Finally, using this context, formatted pytest source code is generated and written to disk.

The process of generating context and rendering templates will share the same logic across different kinds of tests. This shared logic could be split into three modules: CLI, Test Provider, and Source Code Generator.

I hope that this effort will reduce the friction of creating new kinds of gentest tests.

Some thoughts on EthDebug format

The EthDebug working group seeks to design a debugging data format for EVM languages. This format will standardize the interface between compilers and debuggers. I was involved in the project before getting busy with this fellowship. This week I joined their community call and caught up on the latest updates.

The group works closely with the Solidity team to build support for the format in the Solidity compiler. I have some thoughts on the pointer schema design that I hope to write more about.

Reviewing EthShadow

I am also learning more about the Shadow simulator. I have volunteered to review Daniel's documentation for his ethshadow tool. This is mostly an excuse for me to learn more about his excellent tool and network simulations in general.