🗓️ Week 11

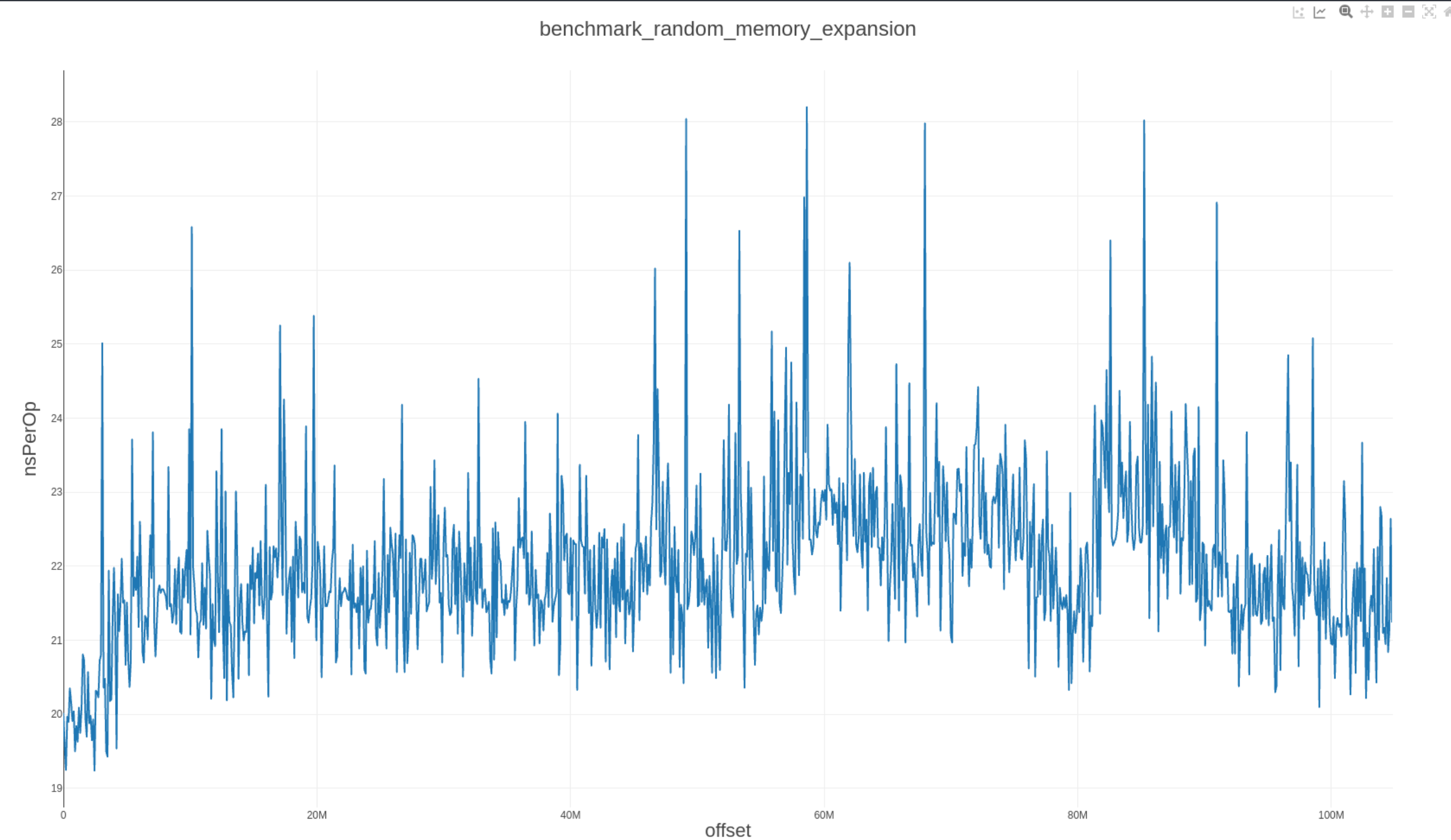

This week builds on the previous work on memory expansion. The previous benchmark expanded memory with zero bytes, so I modified the code to fill memory with random bytes instead. The goal was to determine whether copying non-zero bytes requires more resources.

Memory expansion with random bytes

I introduced generateRandomBytes, which fills the memory with random bytes. The benchmark then writes to offsets ranging from 1KB to 100MB, driven by a Node.js script.

    // memStartFlag holds the memory offset the benchmark writes to.
    var memStartFlag uint64

    func init() {
        flag.Uint64Var(&memStartFlag, "memStart", 0x0, "Memory start value")
    }

    ...

    func BenchmarkOpMstore(bench *testing.B) {
        var (
            env            = NewEVM(BlockContext{}, TxContext{}, nil, params.TestChainConfig, Config{})
            stack          = newstack()
            mem            = NewMemory()
            evmInterpreter = NewEVMInterpreter(env)
        )
        env.interpreter = evmInterpreter

        // Fill memory with random bytes, up to the write offset plus one 32-byte word
        store, err := generateRandomBytes(memStartFlag + 32)
        if err != nil {
            bench.Fatal(err)
        }
        mem.store = store

        pc := uint64(0)
        memStart := new(uint256.Int).SetUint64(memStartFlag)
        v := "abcdef00000000000000abba000000000deaf000000c0de00100000000133700"
        value := new(uint256.Int).SetBytes(common.Hex2Bytes(v))

        bench.ResetTimer()
        for i := 0; i < bench.N; i++ {
            // opMstore pops the offset first, then the value
            stack.push(value)
            stack.push(memStart)
            opMstore(&pc, evmInterpreter, &ScopeContext{mem, stack, nil})
        }
    }

    // generateRandomBytes returns a slice of the given length filled with random bytes.
    func generateRandomBytes(length uint64) ([]byte, error) {
        // Create a byte slice of the specified length
        bytes := make([]byte, length)
        // Fill the byte slice with random bytes
        _, err := rand.Read(bytes)
        if err != nil {
            return nil, err
        }
        return bytes, nil
    }

The resulting graph shows that, as with zero-byte expansion, the write operation is not particularly expensive when memory is filled with random bytes.
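The Node.js driver script is not shown here. As a rough illustration of the sweep it performs, the sketch below generates the offsets and the matching `go test` arguments in Go. Several things are assumptions on my part: the helper names `sweepOffsets` and `benchArgs` are hypothetical, the offsets use decimal units with a step factor of 10, and the benchmark is assumed to live in the `./core/vm` package. Only the `memStart` flag and the benchmark name come from the code above.

```go
package main

import "fmt"

// sweepOffsets returns lo, lo*factor, lo*factor^2, ... up to and including hi.
// The geometric step is an assumption; the actual script may use other steps.
func sweepOffsets(lo, hi, factor uint64) []uint64 {
	var out []uint64
	if lo == 0 || factor < 2 {
		return out
	}
	for off := lo; off <= hi; off *= factor {
		out = append(out, off)
	}
	return out
}

// benchArgs builds the argument list for one benchmark run.
// Flags after -args are handed to the test binary, which is where
// the custom memStart flag is registered.
func benchArgs(offset uint64) []string {
	return []string{
		"test", "./core/vm", // assumed package path
		"-run", "^$", // skip unit tests, run benchmarks only
		"-bench", "BenchmarkOpMstore",
		"-args", fmt.Sprintf("-memStart=%d", offset),
	}
}

func main() {
	// 1KB to 100MB in decimal units (assumption)
	for _, off := range sweepOffsets(1_000, 100_000_000, 10) {
		fmt.Println("go", benchArgs(off))
	}
}
```

Each printed line corresponds to one benchmark invocation; passing the same arguments to `exec.Command("go", ...)` would run them from Go directly.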

Benchmarking mainnet

I have set up a local Geth node to analyze trends in memory consumption on the mainnet. I expect to have the results by next week.

Understanding solc memory usage

The Solidity compiler would greatly benefit from repricing EVM memory. I spent time understanding how solc currently uses memory. Daniel from the solc team proposes splitting memory into pages, with each page costing the same amount of gas:

I mean that there's separate larger disjoint regions for which the pricing is equivalent. E.g. you could say that memory is split into 256 pages (identified by the most significant 8 bits of the offset) - and that (without prior memory usage) mstore(0, 42) has the same cost as mstore(0x010000000...0, 42). That'd be very large pages - it could also be very small pages - as in memory is allocated in 4096 byte chunks and you only pay for each chunk, but independently of their position. But that probably has worse properties in disincentivising using large amounts of memory.
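To make the small-page variant from the quote more concrete, here is a minimal Go sketch of my own, not part of the proposal itself. It charges a flat price the first time a 4096-byte chunk is touched, regardless of where the chunk sits. The type `pagedMemory`, the function names, and the `gasPerChunk` value of 100 are hypothetical and chosen only for illustration; offsets are simplified to `uint64` rather than 256-bit words. For contrast, `currentExpansionGas` follows the existing EVM rule of 3 gas per word plus words squared divided by 512.

```go
package main

import "fmt"

const (
	chunkSize   = 4096 // bytes per chunk, taken from the quoted example
	gasPerChunk = 100  // hypothetical flat price, not a proposed value
)

// pagedMemory records which chunks have already been paid for.
type pagedMemory struct {
	touched map[uint64]struct{}
}

func newPagedMemory() *pagedMemory {
	return &pagedMemory{touched: make(map[uint64]struct{})}
}

// chargeAccess returns the gas for accessing [offset, offset+size).
// Only chunks not touched before are charged, independent of position.
// The sketch assumes offset+size does not overflow uint64.
func (m *pagedMemory) chargeAccess(offset, size uint64) uint64 {
	if size == 0 {
		return 0
	}
	first := offset / chunkSize
	last := (offset + size - 1) / chunkSize
	var gas uint64
	for c := first; c <= last; c++ {
		if _, ok := m.touched[c]; !ok {
			m.touched[c] = struct{}{}
			gas += gasPerChunk
		}
	}
	return gas
}

// currentExpansionGas is the total cost of memory of the given size under
// the existing rule: 3 gas per 32-byte word plus words^2 / 512.
func currentExpansionGas(sizeBytes uint64) uint64 {
	words := (sizeBytes + 31) / 32
	return 3*words + words*words/512
}

func main() {
	m := newPagedMemory()
	fmt.Println("paged, offset 0:    ", m.chargeAccess(0, 32))
	fmt.Println("paged, offset 1<<40:", m.chargeAccess(1<<40, 32))
	fmt.Println("current, 1 MiB:     ", currentExpansionGas(1<<20))
}
```

Under this sketch a write at a very high offset costs the same as a write at offset 0, which is the property the quote describes. It also shows the stated downside: total cost grows only linearly with the number of chunks used, so large memory usage is discouraged less than under the quadratic rule.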

Next steps

- Finish mainnet analysis.

- Continue understanding solc memory usage.

📞 Calls

- Aug 19, 2024 - EPF Standup #10

- Aug 20, 2024 - AMA with lightclient.

- Aug 22, 2024 - EthDebug community call.